Here’s a basic question: If the pollsters were so wrong, as we learned on that fateful election night, then why do the final pre-election forecasts match the post-election exit polls more closely than they match the reported vote? The reported vote results in this election often diverge significantly from the exit polls, especially in states that surprisingly flipped expectations, such as Wisconsin, North Carolina, and Pennsylvania.

Now, the general claim is that the pollsters were wrong, but that raises the question of why the exit poll results fall within the margin of error of the final pre-election forecasts. In the following, I compare Nate Silver’s FiveThirtyEight final pre-election poll forecasts with CNN’s published exit poll results (as reported by TDMS Research); these are the exit poll results before they are adjusted to forcibly match the vote results. The comparison reveals that FiveThirtyEight’s forecasts are significantly closer to the exit poll results, with the majority within the margin of error, while the reported vote results diverge well beyond the margin of error from both those forecasts and the exit poll results.

Several journalists have noted the significant discrepancies between the exit poll results and the reported vote counts. The discrepancies for certain states ranged from 3.2% to 6.1% relative to the exit poll results, which reaches well beyond the margin of error. In each case of discrepancy, there was a “red shift” toward Trump. Notably, these occurred in battleground states, tilting the final tally in Trump’s favor and lifting him over 270 electoral votes. These discrepancies alone raise questions that should be plainly addressed and that deserve clear answers for the electorate. Yet pre-election final polls provide another check upon the system. In a sense, we have two lines of evidence concerning voter opinion: the polls from the final days before the election and the exit polls taken immediately after voting. What often goes awry is the reported vote count in between. There is remarkable consistency between the final pre-election poll results and the exit poll results, as shown in the tables below.

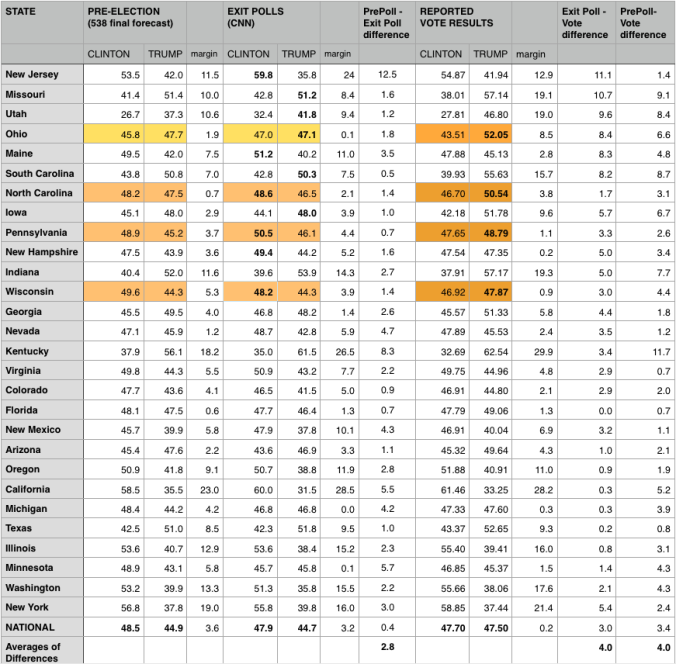

Here are the final forecasts by FiveThirtyEight and the exit poll results reported by CNN, along with the reported vote tallies, which reveal significant disparities (Table 1).

Table 1. Results for states with exit polls, compared with final pre-election forecasts and reported vote counts.

In Table 1, FiveThirtyEight’s predictions match the exit poll results more closely, differing by 2.8% on average, than the reported vote counts do, which differ from the exit polls by 4% on average. However, the larger differences occur only in some states, typically the battleground states in this election. This indicates that the shift to Trump did not occur across all areas and was not evenly distributed; rather, the results exhibit greater disparities only in certain areas, as highlighted by TDMS Research (Table 2).
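For readers who want to reproduce this kind of comparison, here is a minimal sketch of one plausible way to compute an average disparity: the mean absolute difference between two series of state-level margins. The function name `mean_abs_gap` and the three sample values per series are hypothetical placeholders, not the figures from Table 1, and the post does not state that its averages were computed exactly this way.

```python
from statistics import mean


def mean_abs_gap(a, b):
    """Average absolute difference between two aligned lists of
    state-level margins, in percentage points."""
    if len(a) != len(b):
        raise ValueError("both lists must cover the same states")
    return mean(abs(x - y) for x, y in zip(a, b))


# Placeholder margins (positive = one candidate ahead), NOT real data.
forecast = [1.0, -2.0, 4.0]    # hypothetical final pre-election forecasts
exit_poll = [2.0, -1.0, 6.0]   # hypothetical unadjusted exit polls
reported = [-3.0, -5.0, 1.0]   # hypothetical reported vote results

forecast_vs_exit = mean_abs_gap(forecast, exit_poll)
reported_vs_exit = mean_abs_gap(reported, exit_poll)

print(f"forecast vs exit poll: {forecast_vs_exit:.1f} points")
print(f"reported vs exit poll: {reported_vs_exit:.1f} points")
```

Substituting the actual columns from the tables for the placeholder lists gives the two averages being compared.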

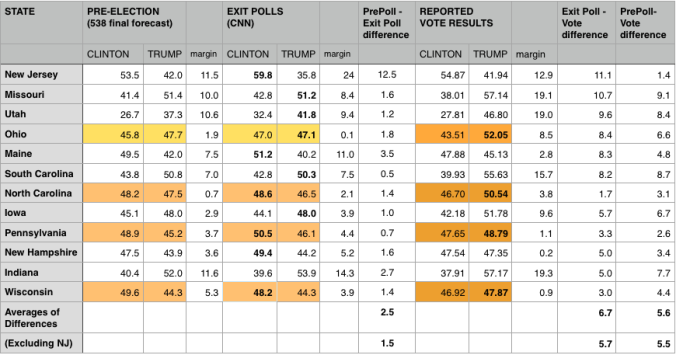

Table 2. Pre-election forecasts compared with exit poll and reported vote results for states with marked discrepancies.

In those states with marked differences between vote counts and exit poll results, the discrepancies widen markedly, to 6.7%. That is well beyond the margin of error of the final pre-election poll forecasts. Yet those final pre-election forecasts by FiveThirtyEight are even closer to the exit poll results, at 2.5%; if we remove the outlier, New Jersey, the FiveThirtyEight forecasts are within 1.5% of the exit polls, well within the margin of error.

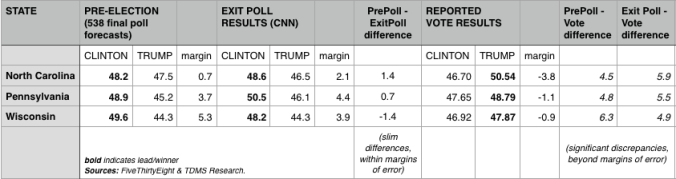

Table 3. Pre-election forecasts compared with exit poll results and reported vote results for three states that flipped predictions and exhibited marked discrepancies.

In short, the final polls leading up to the election are a close match to the exit polls, with differences of only ±1.4%, within an acceptable margin of error. This correspondence gives one confidence in both the final forecasts and the exit polls. The striking discrepancies between the vote results and the exit polls require some explanation. Moreover, these discrepancies occurred in battleground states, reversing the expected results there, which was enough to turn the election to Trump and carry him past 270 electoral votes.
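As a rough illustration of what “within the margin of error” means, the sketch below uses the standard textbook formula for the 95% margin of error of a single proportion under simple random sampling. The sample size of 1,000 and the example shares are assumptions chosen for illustration, not values from the polls discussed here, and real exit polls use more complex sampling designs than this formula assumes.

```python
import math


def margin_of_error(p, n, z=1.96):
    """Approximate margin of error for a proportion p from a simple
    random sample of size n (z = 1.96 gives about 95% confidence)."""
    return z * math.sqrt(p * (1 - p) / n)


def within_margin(a, b, moe):
    """True if two shares differ by no more than the margin of error."""
    return abs(a - b) <= moe


# Hypothetical poll: 1,000 respondents, a candidate near 50%.
moe = margin_of_error(0.5, 1000)
print(f"margin of error: +/-{moe * 100:.1f} points")

# Hypothetical shares, for illustration only.
print(within_margin(0.48, 0.50, moe))  # a 2-point gap
print(within_margin(0.44, 0.50, moe))  # a 6-point gap
```

With these assumed inputs the margin is roughly three points, so a two-point gap falls inside it and a six-point gap falls outside it.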

I raise these issues to ask why there are beyond-the-margin-of-error discrepancies between the vote counts on the one hand and the exit poll results and final pre-election polls on the other. If the before-estimate matches the exit-result, why are the actual vote results so different? Further, why only in certain states, particularly those that were battleground states in this election?

Exit polls are routinely used as the primary method to validate election results in other countries. In the U.S., however, they are predominantly used only to tell stories about how certain segments of the electorate voted (women, college students, Hispanics, etc.), and the common refrain is that exit polls are unreliable for checking vote results. Yet we invest immense trust in the exit polls’ percentages for those voter segments. Why don’t we also trust the exit poll percentages for the simple presidential result? Other countries do.

One answer is that the polls are simply inaccurate. But why would that be so? And why only in certain states? We should only accept an answer that is not just an opinion or an assertion, but one backed by reasoning and evidence. Discrepancies highlight irregularities, and these should be adequately explained. If they can’t be readily explained, then the other option is that the results may have been tampered with. If it is even a possibility that foreign or other hackers interfered with election results, it should rise to the level of investigation. This is a democracy, and election results should be transparent and clear to all.

Sources for Pre-Election Poll Results & Exit Poll Results:

Nate Silver | 2016 Election Forecast | FiveThirtyEight

Theodore de Macedo Soares | 2016 Presidential Election Table | TDMS|Research
